
This is just a little exercise in partial differentiation.

Introduction

So, you have a password of length $n$ from an alphabet $A$. Suppose you have a choice to increase the length, $n$, vs the alphabet size, $|A|$. Which will make your password more secure?

Here we will assume that passwords are subject only to random guesses, and so we make the simple assumption that, given the data $(|A|, n)$, the larger the number of possible passwords, the more secure the password.

The set of passwords is $A^n$. This has size $|A|^n$.

Simple Numerics

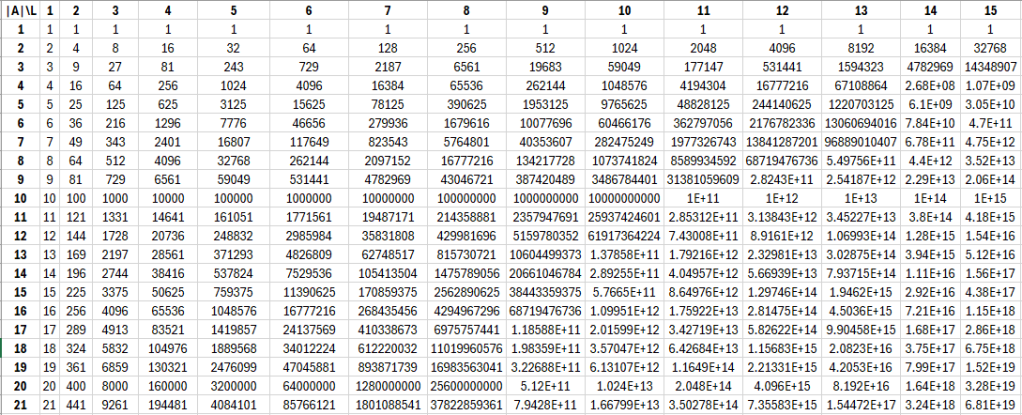

A simple approach is to make a table:

Eyeballing this, it appears that most of the time increasing the length is preferable to increasing the alphabet size. But then again, jumps in alphabet size tend to be larger, e.g. 26 to 52, 52 to 62, etc.

Suppose you have a length $n$ password from the alphabet $\{a, b, \dots, z\}$. Are you better off going to length $n+1$, or going to the alphabet of size $62$, with the capital letters and the numbers? As it happens, it depends on $n$. For small $n$ you should increase the length, but for larger $n$ you should switch to the bigger alphabet, which more than doubles its size. This is typical.
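The sketch below runs the comparison just described. It assumes that "increase the length" means one extra character ($n \to n+1$). It compares 26 lower-case letters with 52 symbols (adding capitals) or 62 symbols (adding capitals and digits), and finds the first length at which the bigger alphabet gives more passwords. Python integers are exact, so rounding is not an issue. The function name is hypothetical.

```python
def first_length_where_alphabet_wins(small: int, big: int) -> int:
    """Smallest length n with big**n > small**(n + 1).

    Compares switching from `small` to `big` symbols at the same length n
    against keeping `small` symbols and adding one character. Python integers
    are exact, so there is no rounding to worry about.
    """
    if not 1 <= small < big:
        raise ValueError("need 1 <= small < big")
    n = 1
    while big**n <= small ** (n + 1):
        n += 1
    return n


if __name__ == "__main__":
    # 26 lower-case letters vs adding capitals (52) or capitals and digits (62).
    for big in (52, 62):
        print(big, first_length_where_alphabet_wins(26, big))
```

Under these assumptions the bigger alphabet starts to win at $n = 5$ for 52 symbols and at $n = 4$ for 62 symbols. Below those lengths, one extra character is better.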

Some analysis

Consider a password from a set of $\alpha^n$ passwords, where $\alpha = |A|$ is the alphabet size and $n$ the length. Consider the two options, for constant $k > 1$:

- increase the alphabet size: $\alpha \to k\alpha$, or

- increase the password length: $n \to n+1$.

If we look at what these do to the number of passwords:

$$(k\alpha)^n \quad \text{vs} \quad \alpha^{n+1},$$

we are comparing $k^n\alpha^n$ to $\alpha \cdot \alpha^n$. As $\alpha$ and $k$ are constant, we are comparing the exponential function $k^n$ with the fixed number $\alpha$. Increasing the password length can do better initially. But as $n$ increases the exponential function speeds past, so eventually it will make more sense to increase the alphabet size. Our eyeballing has let us down.

For example, for the entries in the table above it doesn't appear to be even close: there you get far more security by increasing the length.

But for a fixed $\alpha$, there exists a length beyond which it makes more sense to increase the alphabet by a factor of $k$: we need $k^n > \alpha$, that is, $n > \ln\alpha / \ln k$. The answer is 61.

So, if you have a length 61 password from an alphabet of size 20, you are better off increasing the alphabet size.

I guess the relevant questions here are the following: if we go to the larger alphabet obtained by adding the special characters, what are the answers?

- If

, and

, you could increase the length of the password rather than jumping to

.

- If

, and

, you could increase the length of the password rather than jumping to

.

- If

, and

, you could increase the length of the password rather than jumping to

.

Going to special characters only makes sense in our framework if there is also a password length condition of 10 or more.
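A short sketch can answer these questions under two assumptions. First, the special-character alphabet has 94 symbols (the printable ASCII characters other than space; other counts are in use). Second, the jump is compared against a single extra character. The code solves $94^n = \alpha^{n+1}$ for real $n$, then checks neighbouring whole numbers exactly. The function names are hypothetical.

```python
import math


def crossover_length(alpha: int, beta: int) -> float:
    """Real n at which beta**n == alpha**(n + 1).

    Solving n*ln(beta) = (n + 1)*ln(alpha) gives n = ln(alpha) / ln(beta / alpha).
    Beyond this n the bigger alphabet gives more passwords than one extra character.
    """
    return math.log(alpha) / math.log(beta / alpha)


def min_length_for_special_characters(alpha: int, beta: int = 94) -> int:
    """Smallest whole n with beta**n > alpha**(n + 1); beta = 94 is an assumption."""
    n = math.floor(crossover_length(alpha, beta)) + 1
    # Guard against floating-point error with exact integer checks on both sides.
    while beta ** (n - 1) > alpha**n:
        n -= 1
    while beta**n <= alpha ** (n + 1):
        n += 1
    return n


if __name__ == "__main__":
    for alpha in (26, 52, 62):
        print(alpha, min_length_for_special_characters(alpha))
```

Under these assumptions, jumping to 94 symbols beats one extra character from length 3 for $\alpha = 26$, from length 7 for $\alpha = 52$, and from length 10 for $\alpha = 62$. The last of these agrees with the length condition of 10 quoted above.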

Partial Differentiation

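This wasn’t even what I wanted to do here, which was to approximate this question using partial differentiation. In all these questions we are asking about what happens to $|A|^n$ when we change $|A|$ and keep $n$ constant, and vice versa. So partial differentiation. I guess the problem is that $|A|$ and $n$ are discrete, rather than continuous, but sure, $x \mapsto x^n$ and $y \mapsto |A|^y$ are perfectly good functions to differentiate. Write $\alpha = |A|$ and let

$$f(\alpha, n) = \alpha^n.$$

Even I can differentiate with respect to $\alpha$ (in fact, on first go I wrote with respect to $n$ here, and got it wrong!):

$$\frac{\partial f}{\partial \alpha} = n\alpha^{n-1}.$$

We might use some logs to differentiate with respect to $n$. Since $\ln f = n\ln\alpha$, we have $\frac{1}{f}\frac{\partial f}{\partial n} = \ln\alpha$, so

$$\frac{\partial f}{\partial n} = \alpha^n \ln\alpha.$$

These partial derivatives give estimates for a change of $h$ in both variables, $\Delta\alpha = \Delta n = h$:

$$f(\alpha + h, n) - f(\alpha, n) \approx h\,\frac{\partial f}{\partial \alpha} = h\,n\alpha^{n-1},$$

$$f(\alpha, n + h) - f(\alpha, n) \approx h\,\frac{\partial f}{\partial n} = h\,\alpha^n \ln\alpha,$$

so, approximately, we are left comparing $n\alpha^{n-1}$ and $\alpha^n\ln\alpha$. Dividing both by $\alpha^{n-1}$, we see $n$ vs $\alpha\ln\alpha$ … eventually $n > \alpha\ln\alpha$ if both grow, giving us the same answer as before.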
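A small sketch can test how good the partial-derivative estimate is. It compares the threshold $n > \alpha\ln\alpha$ with the exact crossover for adding one symbol versus adding one character. That crossover solves $(\alpha+1)^n = \alpha^{n+1}$, giving $n = \ln\alpha / \ln(1 + 1/\alpha)$. Both function names are hypothetical.

```python
import math


def approx_threshold(alpha: float) -> float:
    """Partial-derivative estimate: the alphabet wins once n > alpha * ln(alpha)."""
    return alpha * math.log(alpha)


def exact_threshold(alpha: float) -> float:
    """Real n where (alpha + 1)**n == alpha**(n + 1): one more symbol vs one more character."""
    return math.log(alpha) / math.log1p(1 / alpha)


if __name__ == "__main__":
    for alpha in (10, 20, 26, 52, 62, 94):
        print(f"{alpha:>3}  approx {approx_threshold(alpha):7.2f}  exact {exact_threshold(alpha):7.2f}")
```

Since $\ln(1 + 1/\alpha) < 1/\alpha$, the exact crossover always lies above $\alpha\ln\alpha$, by roughly $\tfrac12\ln\alpha$. For $\alpha = 20$ the exact crossover is about $61.4$, close to the 61 quoted earlier, while the estimate gives about $59.9$.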

I am now available to help students at all levels in the Glanmire and Bishopstown areas.

Contact me on 086-1089437 or jpmccarthymaths@gmail.com.

Alice, Bob and Carol are hanging around, messing with playing cards.

Alice and Bob each have a new deck of cards, and Alice, Bob, and Carol all know what order the decks are in.

Carol has to go away for a few hours.

Alice starts shuffling the deck of cards with the following weird shuffle: she selects two (different) cards at random, and swaps them. She does this for hours, doing it hundreds and hundreds of times.

Bob does the same with his deck.

Carol comes back and asks “have you mixed up those decks yet?” A deck of cards is “mixed up” if each possible order is approximately equally likely: each of the $52!$ orders has probability approximately $1/52!$.

She asks Alice how many times she shuffled the deck. Alice says she doesn’t know, but it was hundreds, nay thousands of times. Carol says, great, your deck is mixed up!

Bob pipes up and says “I don’t know how many times I shuffled either. But I am fairly sure it was over a thousand.” Carol was just about to say “great job mixing up the deck” when Bob interjected “I do know that I did an even number of shuffles though.”

Why does this mean that Bob’s deck isn’t mixed up?

I am not sure whether the following observation has been made:

When the Jacobi Method is used to approximate the solution of Laplace’s Equation, if the initial temperature distribution is given by $u^{(0)}$, then the iterations $u^{(1)}, u^{(2)}, \dots$ are also approximations to the solution, $u(\mathbf{x}, t)$, of the Heat Equation, assuming the initial temperature distribution is $u^{(0)}$.

I first considered such a thought while looking at approximations to the solution of Laplace’s Equation on a thin plate. I implemented the approximations by writing the iterations onto an Excel worksheet, with conditional formatting to show the hotter and colder areas, and output of the following kind was produced:

Let me say before I go on that this was an implementation of the Gauss-Seidel Method rather than the Jacobi Method, and furthermore the stopping rule used was the rather crude .

However, do the iterations not resemble the flow of heat from the heat source on the bottom through the plate? The aim of this post is to investigate this further. All boundaries will be assumed uninsulated to ease analysis.

Discretisation

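Consider a thin rod of length $L$. If we mesh the rod into $N$ pieces of equal length $\Delta x = L/N$, we have discretised the rod into segments of length $\Delta x$, together with ‘nodes’ $x_i = i\,\Delta x$ for $i = 0, 1, \dots, N$.

Suppose we are interested in the temperature of the rod at a point $x$, $u(x)$, $0 \le x \le L$. We can instead consider a sampling of $u$ at the points $x_i$:

$$u_i := u(x_i), \quad i = 0, 1, \dots, N.$$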
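The sketch below shows the one-dimensional discretisation. It builds the nodes $x_i = i\,\Delta x$ and the samples $u_i = u(x_i)$. The function names are hypothetical, and the temperature profile $u(x) = \sin(\pi x)$ is only an illustration, not taken from the post.

```python
import math


def rod_nodes(length: float, pieces: int) -> list[float]:
    """Nodes x_i = i * dx, i = 0..N, for a rod of length L cut into N equal pieces."""
    dx = length / pieces
    return [i * dx for i in range(pieces + 1)]


def sample(u, nodes):
    """Replace the continuous temperature u(x) by the samples u_i = u(x_i)."""
    return [u(x) for x in nodes]


if __name__ == "__main__":
    xs = rod_nodes(1.0, 4)  # dx = 0.25
    print(xs)  # [0.0, 0.25, 0.5, 0.75, 1.0]
    print(sample(lambda x: math.sin(math.pi * x), xs))
```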

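Similarly we can mesh a plate of dimensions $L_x \times L_y$ into an $N_x \times N_y$ rectangular grid, with each rectangle of area $\Delta x\,\Delta y$, where $\Delta x = L_x/N_x$ and $\Delta y = L_y/N_y$. This comes with nodes $(x_i, y_j) = (i\,\Delta x, j\,\Delta y)$, and we can study the temperature of the plate at a point $(x, y)$ by sampling at the points $(x_i, y_j)$:

$$u_{i,j} := u(x_i, y_j).$$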

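We can also mesh a box of dimensions $L_x \times L_y \times L_z$ into an $N_x \times N_y \times N_z$ 3D grid, with each rectangular box of volume $\Delta x\,\Delta y\,\Delta z$, where $\Delta x = L_x/N_x$, $\Delta y = L_y/N_y$, and $\Delta z = L_z/N_z$. This comes with nodes $(x_i, y_j, z_k) = (i\,\Delta x, j\,\Delta y, k\,\Delta z)$, and we can study the temperature of the box at the point $(x, y, z)$ by sampling at the points $(x_i, y_j, z_k)$:

$$u_{i,j,k} := u(x_i, y_j, z_k).$$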
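The plate and box meshes follow the same pattern in each direction. One hypothetical helper can therefore build the nodes for both, keyed by their index tuples. A second returns the area or volume of a single cell.

```python
from itertools import product


def grid_nodes(dims, counts):
    """Nodes of a rectangular mesh.

    dims   -- side lengths, e.g. (Lx, Ly) for a plate or (Lx, Ly, Lz) for a box
    counts -- number of pieces along each side, e.g. (Nx, Ny) or (Nx, Ny, Nz)
    Returns a dict mapping index tuples (i, j[, k]) to coordinates (x_i, y_j[, z_k]).
    """
    steps = [L / N for L, N in zip(dims, counts)]
    ranges = [range(N + 1) for N in counts]
    return {idx: tuple(i * h for i, h in zip(idx, steps)) for idx in product(*ranges)}


def cell_measure(dims, counts):
    """Area (2D) or volume (3D) of one cell: the product of the step sizes."""
    m = 1.0
    for L, N in zip(dims, counts):
        m *= L / N
    return m


if __name__ == "__main__":
    plate = grid_nodes((2.0, 1.0), (4, 2))
    print(len(plate), plate[(4, 2)], cell_measure((2.0, 1.0), (4, 2)))
```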

Finite Differences

How the temperature evolves is given by partial differential equations, expressing relationships between $u$ and its rates of change.
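Here is one way to see the observation for the rod, assuming that "uninsulated" means fixed end temperatures. A Jacobi sweep for the discretised Laplace equation replaces each interior $u_i$ by $\tfrac12(u_{i-1} + u_{i+1})$. The explicit finite-difference step for the Heat Equation $u_t = c\,u_{xx}$ is $u_i \leftarrow u_i + r(u_{i-1} - 2u_i + u_{i+1})$, with $r = c\,\Delta t/\Delta x^2$. For $r = \tfrac12$ these are the same update. The $m$-th Jacobi iterate is then the explicit heat approximation at time $m\,\Delta t$, with $\Delta t = \Delta x^2/(2c)$. The code checks this numerically; the initial data are made up.

```python
def jacobi_step(u):
    """One Jacobi sweep for the discrete 1D Laplace equation u_{i-1} - 2u_i + u_{i+1} = 0.

    The end values u[0] and u[-1] are fixed boundary temperatures.
    """
    return [u[0]] + [(u[i - 1] + u[i + 1]) / 2 for i in range(1, len(u) - 1)] + [u[-1]]


def heat_step(u, r):
    """One explicit (forward-time, centred-space) step for u_t = c u_xx, with r = c*dt/dx**2."""
    return (
        [u[0]]
        + [u[i] + r * (u[i - 1] - 2 * u[i] + u[i + 1]) for i in range(1, len(u) - 1)]
        + [u[-1]]
    )


if __name__ == "__main__":
    # Hypothetical rod: hot end held at 100, cold end at 0, interior initially 0.
    u_jacobi = [100.0] + [0.0] * 10
    u_heat = list(u_jacobi)
    for _ in range(50):
        u_jacobi = jacobi_step(u_jacobi)
        u_heat = heat_step(u_heat, r=0.5)
    # With r = 1/2 the two schemes coincide, up to floating-point rounding.
    print(max(abs(a - b) for a, b in zip(u_jacobi, u_heat)))
```

On a square grid with $\Delta x = \Delta y$, the same argument pairs the four-neighbour Jacobi average with the explicit two-dimensional heat step at $r = \tfrac14$. The Gauss-Seidel sweep mentioned above reuses updated values within a sweep, so it does not line up with the explicit heat step in this way.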

We are the mathematicians and they are the physicists (all jibes and swipes are to be taken lightly!!)

A

A is for atom and axiom. While we build beautiful universes from our carefully considered axioms, they try and destroy this one by smashing atoms together.

B

B is for the Banach-Tarski Paradox, proof if it was ever needed that the imaginary worlds which we construct are far more interesting than the dullard of a one that they study.

C

C is for Calculus and Cauchy. They gave us calculus about 340 years ago: it only took us about 140 years to make sure it wasn’t all nonsense! Thanks Cauchy!

D

D is for Dimension. First they said there were three, then Einstein said four, and now it ranges from 6 to 11 to 24 depending on the day of the week. No such problems for us: we just use $\mathbb{R}^n$.

E

E is for Error Terms. We control them, optimise them, upper bound them… they just pretend they’re equal to zero.

F

F is for Fundamental Theorems… they don’t have any.

G

G is for Gravity and Geometry. Ye were great, yeah, when that apple fell on Newton’s head, but it was us asking stupid questions about parallel lines that allowed Einstein to formulate his epic theory of General Relativity.

H

H is for Hole as in the Black Hole they are going to create at CERN.

I

I is for Infinity. In our hands a beautiful concept; in yours an ugliness to be swept under the carpet via the euphemism of “renormalisation”…

J

J is for Jerk: the third derivative of displacement. Did you know that the fourth, fifth, and sixth derivatives are known as Snap, Crackle, and Pop? No, I did not know they had a sense of humour either.

K

K is for Knot Theory. A mathematician meets an experimental physicist in a bar and they start talking.

- Physicist: “What kind of math do you do?”

- Mathematician: “Knot theory.”

- Physicist: “Yeah, me neither!”

L

L is for Lasers. I genuinely spent half an hour online looking for a joke, or a pun, or something humorous about lasers… Lost Ample Seconds: Exhausting, Regrettable Search.

M

M is for Mathematical Physics: a halfway house for those who lack the imagination for mathematics and the recklessness for physics.

N

N is for the Nobel Prize, which many mathematicians have won, but never in mathematics of course. Only one physicist has won the Fields Medal.

O

O is for Optics. Optics are great: can’t knock ’em… 7 years bad luck.

P

P is for Power Series. There are rules about wielding power series; rules that, if broken, give gibberish such as the sum of the natural numbers being $-\frac{1}{12}$. They don’t care: they just keep on trucking.

Q

Q is for Quark… they named them after a line in Joyce as the theory makes about as much sense as Joyce.

R

R is for Relativity. They are relatively pleasant.

S

S is for Singularities… instead of saying “we’re stuck” they say “singularity”.

T

T is for Tarski… Tarski had a son called Jan who was a physicist. Tarski always appears twice.

U

U is for the Uncertainty Principle. I am uncertain as to whether writing this was a good idea.

V

V is for Vacuum… Did you hear about the physicist who wanted to sell his vacuum cleaner? Yeah… it was just gathering dust.

W

W is for the Many-Worlds Interpretation of Quantum Physics, according to which Mayo GAA lose All-Ireland Finals in infinitely many different ways.

X

X is unknown.

Y

Y is for Yucky. Definition: messy or disgusting. Example: Their “Calculations”

Z

Z is for Particle Zoo… their theories are getting out of control. They started with atoms and indeed atoms are only the start. Pandora’s Box has nothing on these people. Forget baryons, bosons, mesons, and quarks: the latest theories ask for sneutrinos and squarks; photinos and gluinos, zinos and even winos. A zoo indeed.

PS

We didn’t even mention String Theory!

The End.

A colleague writes (extract):

“I have an assessment with 4 sections in it A,B,C and D.

I have a question bank for each section. The number of questions in each bank is A-10, B-10, C-5, D-5.

In my assessment I will print out randomly a fixed number of questions from each bank. Section A will have 5 questions, B-5, C-2, D-2. 14 questions in total appear on the exam.

I can figure out how many different exam papers (order doesn’t matter) can be generated (I think!).

But my question is: what is the uniqueness of each exam, or what overlap between exams can be expected?

I am not trying to get unique exams for everyone (unique as in no identical questions) but would kinda like to know what is the overlap.”

Following the same argument as here we can establish that:

Fact 1

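The expected number of students to share an exam is $20\left(1 - \left(\frac{N-1}{N}\right)^{19}\right) \approx 0.00006$.

Let the number of exams be

$$N = \binom{10}{5}\binom{10}{5}\binom{5}{2}\binom{5}{2} = 252 \cdot 252 \cdot 10 \cdot 10 = 6\,350\,400.$$

This approach takes advantage of the fact that expectation is linear, and that the probability of an event not happening is

$$\mathbb{P}[\text{not } E] = 1 - \mathbb{P}[E].$$

Label the 20 students by $i = 1, 2, \dots, 20$ and define a random variable $X_i$ by

$$X_i = \begin{cases} 1 & \text{if student } i \text{ shares an exam with another student,} \\ 0 & \text{otherwise.} \end{cases}$$

Then $X$, the number of students who share an exam, is given by:

$$X = X_1 + X_2 + \cdots + X_{20},$$

and we can calculate $\mathbb{E}[X]$ using the linearity of expectation:

$$\mathbb{E}[X] = \mathbb{E}[X_1] + \mathbb{E}[X_2] + \cdots + \mathbb{E}[X_{20}].$$

The $X_i$ are not independent, but the linearity of expectation holds even when the addend random variables are not independent… and each of the $X_i$ has the same expectation. Let $p_i$ be the probability that student $i$ does not share an exam with anyone else; then

$$\mathbb{E}[X_i] = 1 \cdot \mathbb{P}[X_i = 1] + 0 \cdot \mathbb{P}[X_i = 0],$$

but $0 \cdot \mathbb{P}[X_i = 0] = 0$, and

$$\mathbb{P}[X_i = 1] = 1 - \mathbb{P}[X_i = 0] = 1 - p_i,$$

and so

$$\mathbb{E}[X_i] = 1 - p_i.$$

All of the 20 have this same expectation and so

$$\mathbb{E}[X] = 20(1 - p_i).$$

Now, what is the probability that nobody shares student $i$’s exam?

We need students $1, \dots, i-1$ and $i+1, \dots, 20$ (19 students) to have different exams to student $i$, and for each the probability of this happening is $\frac{N-1}{N}$. We do have independence here: student 1 not sharing student $i$’s exam doesn’t change the probability of student 2 not sharing student $i$’s exam. So $p_i$ is the product of the probabilities.

So we have that

$$p_i = \left(\frac{N-1}{N}\right)^{19},$$

and so the answer to the question is:

$$\mathbb{E}[X] = 20\left(1 - \left(\frac{N-1}{N}\right)^{19}\right) \approx 0.00006.$$

We can get an estimate for the probability that two or more students share an exam using Markov’s Inequality:

Fact 2

$$\mathbb{P}[X \geq 2] \leq \frac{\mathbb{E}[X]}{2} \approx 0.00003.$$

This estimate is tight: the probability that two or more students (out of 20) share an exam is about 0.003%.

This tallies very well with the exact probability, which can be found using a standard Birthday Problem argument (see the solution to Q. 7 here) to be:

$$\mathbb{P}[X \geq 2] = 1 - \frac{N(N-1)(N-2)\cdots(N-19)}{N^{20}} \approx 0.00003.$$

The probability that two given students share an exam is $\frac{1}{N} \approx 1.6 \times 10^{-7}$.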
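The numbers in Facts 1 and 2 can be checked with a few lines of Python. The sketch below uses hypothetical function names. It computes $N$, the expectation $20(1 - ((N-1)/N)^{19})$, the Markov bound, the exact Birthday Problem probability and $1/N$.

```python
from math import comb, prod


def number_of_exams() -> int:
    """Papers from choosing 5 of 10, 5 of 10, 2 of 5 and 2 of 5 questions."""
    return comb(10, 5) * comb(10, 5) * comb(5, 2) * comb(5, 2)


def expected_sharers(students: int, exams: int) -> float:
    """E[X] = s * (1 - ((N - 1) / N) ** (s - 1)), by linearity of expectation."""
    return students * (1 - ((exams - 1) / exams) ** (students - 1))


def prob_some_share(students: int, exams: int) -> float:
    """Exact Birthday Problem probability that at least two students get the same paper."""
    all_different = prod((exams - j) / exams for j in range(students))
    return 1 - all_different


if __name__ == "__main__":
    N = number_of_exams()
    ex = expected_sharers(20, N)
    print("N =", N)
    print("E[X] =", ex)
    print("Markov bound on P[X >= 2] =", ex / 2)
    print("exact P[X >= 2] =", prob_some_share(20, N))
    print("P[two given students share] =", 1 / N)
```

This gives $N = 6\,350\,400$ and $\mathbb{E}[X] \approx 5.98 \times 10^{-5}$. The Markov bound and the exact probability both come out at about $2.99 \times 10^{-5}$.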

Fact 3

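The expected number of shared questions between two students is 6.6.

Take students 1 and 2. The questions are in four bins: two of ten, two of five. Let $S_j$ be the number of questions in bin $j$ that students 1 and 2 share. The expected number of shared questions, $\mathbb{E}[S]$, is:

$$\mathbb{E}[S] = \mathbb{E}[S_1] + \mathbb{E}[S_2] + \mathbb{E}[S_3] + \mathbb{E}[S_4],$$

and the numbers are small enough to calculate the probabilities exactly using the hypergeometric distribution.

The calculations for bins 1 and 2, and for bins 3 and 4, are the same. The expectation is

$$\mathbb{E}[S_1] = \sum_{k=0}^{5} k\,\mathbb{P}[S_1 = k].$$

Writing briefly $p_k = \mathbb{P}[S_1 = k]$, looking at the referenced hypergeometric distribution we find:

$$p_k = \frac{\binom{5}{k}\binom{5}{5-k}}{\binom{10}{5}}, \qquad (p_0, \dots, p_5) = \left(\tfrac{1}{252}, \tfrac{25}{252}, \tfrac{100}{252}, \tfrac{100}{252}, \tfrac{25}{252}, \tfrac{1}{252}\right),$$

and we find:

$$\mathbb{E}[S_1] = \frac{0 \cdot 1 + 1 \cdot 25 + 2 \cdot 100 + 3 \cdot 100 + 4 \cdot 25 + 5 \cdot 1}{252} = \frac{630}{252} = 2.5.$$

Similarly we see that

$$\mathbb{E}[S_3] = 0 \cdot \tfrac{3}{10} + 1 \cdot \tfrac{6}{10} + 2 \cdot \tfrac{1}{10} = 0.8,$$

and so, using linearity:

$$\mathbb{E}[S] = 2.5 + 2.5 + 0.8 + 0.8 = 6.6.$$

This suggests that on average students share about 50% of the question paper (6.6 of 14 questions). Markov’s Inequality gives:

$$\mathbb{P}[S \geq 7] \leq \frac{\mathbb{E}[S]}{7} = \frac{6.6}{7} \approx 0.94,$$

but I do not believe this is tight.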
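Below is the hypergeometric calculation of Fact 3 in exact rational arithmetic, as a sketch with hypothetical helper names. Student 1's questions in a bank are held fixed. The number of those that student 2 also draws is then hypergeometric.

```python
from fractions import Fraction
from math import comb


def overlap_pmf(bank: int, picked: int) -> dict[int, Fraction]:
    """Distribution of shared questions when two students each get `picked` of `bank` questions.

    With student 1's questions fixed, P[S = k] = C(picked, k) * C(bank - picked, picked - k) / C(bank, picked).
    """
    total = comb(bank, picked)
    return {
        k: Fraction(comb(picked, k) * comb(bank - picked, picked - k), total)
        for k in range(picked + 1)
    }


def mean(pmf: dict[int, Fraction]) -> Fraction:
    """Expected value of a distribution given as {value: probability}."""
    return sum(k * p for k, p in pmf.items())


if __name__ == "__main__":
    bins = [(10, 5), (10, 5), (5, 2), (5, 2)]
    expected = sum(mean(overlap_pmf(b, p)) for b, p in bins)
    print(expected, float(expected))  # expected number of shared questions
    print(float(expected / 7))  # Markov bound on P[S >= 7]
```

This reproduces $\mathbb{E}[S] = 33/5 = 6.6$ and the Markov bound $33/35 \approx 0.94$.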

Calculating this probability exactly is tricky because there are many different ways that students can share a certain number of questions. We would be looking at something like “multiple hypergeometric”, and I would calculate it as the event not-(0 or 1 or 2 or 3 or 4 or 5 or 6).
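The four bins are drawn independently, so the exact distribution of $S = S_1 + S_2 + S_3 + S_4$ can be obtained by convolving the four hypergeometric distributions. This avoids counting the ways of sharing by hand. A sketch with hypothetical names:

```python
from fractions import Fraction
from math import comb


def overlap_pmf(bank, picked):
    """Hypergeometric pmf (as a list indexed by value) of questions shared in one bank."""
    total = comb(bank, picked)
    return [
        Fraction(comb(picked, k) * comb(bank - picked, picked - k), total)
        for k in range(picked + 1)
    ]


def convolve(p, q):
    """Distribution of the sum of two independent counts with pmfs p and q."""
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out


def shared_questions_pmf():
    """Exact pmf of S over 0..14 for the banks (10, 5), (10, 5), (5, 2), (5, 2)."""
    pmf = [Fraction(1)]
    for bank, picked in [(10, 5), (10, 5), (5, 2), (5, 2)]:
        pmf = convolve(pmf, overlap_pmf(bank, picked))
    return pmf


if __name__ == "__main__":
    pmf = shared_questions_pmf()
    tail = 1 - sum(pmf[:7])  # P[S >= 7] = 1 - P[S in {0, ..., 6}]
    print(float(tail))
```

The printed value can then be set against the Markov bound of about 0.94.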

I think the result is striking enough at this time!

We tell four tales of De Morgan.

In each case we have something that looks like AND, something that looks like OR, and something that looks like NOT.

Sets

The Collection of Objects

Consider a universe of discourse/universal set/ambient set $U$. When talking about people this might be the collection of all people. When talking about natural numbers this might be the set $\mathbb{N}$. When talking about real numbers this might be the set $\mathbb{R}$. When talking about curves it might be the set of subsets of the plane, $\mathcal{P}(\mathbb{R}^2)$, etc.

The collection of objects in this case is the set of subsets of $U$, denoted $\mathcal{P}(U)$.

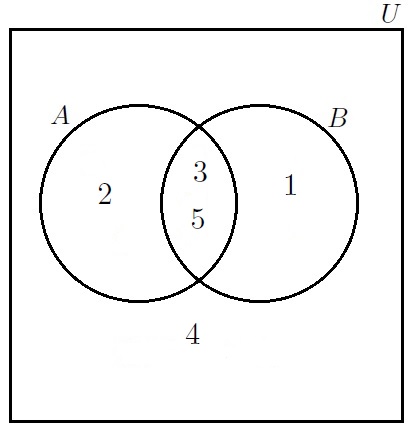

Suppose, for the purposes of illustration, that

.

Consider the subsets , and

.

in the obvious way.

AND

Note that two objects are contained both in $A$ AND in $B$. We call the set of such objects the intersection of $A$ AND $B$, $A \cap B$:

$$A \cap B = \{x \in U : x \in A \text{ and } x \in B\}.$$
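Python's built-in sets give a quick way to experiment with AND on subsets. The ambient set and subsets below are made-up examples, not the ones in the post. The last line checks De Morgan's law for this example, with complements taken inside $U$.

```python
def intersection(A: set, B: set) -> set:
    """A AND B: the objects that are in A and also in B."""
    return {x for x in A if x in B}


if __name__ == "__main__":
    # Hypothetical ambient set and subsets.
    U = set(range(1, 11))
    A = {x for x in U if x % 2 == 0}  # even numbers
    B = {x for x in U if x % 3 == 0}  # multiples of three
    print(intersection(A, B))  # {6}
    print(intersection(A, B) == A & B)  # Python's built-in & agrees: True
    # De Morgan: NOT (A AND B) equals (NOT A) OR (NOT B), complements inside U.
    print(U - (A & B) == (U - A) | (U - B))  # True
```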

We can represent the ambient set $U$, as well as the sets $A$ and $B$, and the fact that they intersect, using a Venn Diagram:

We can demonstrate for a general $A$ and $B$ ‘where’ the intersection is:

Slides of a talk given at CIT School of Science Seminar.

Talk delivered to the Conversations on Teaching and Learning Winter Programme 2018/19, organised by the Teaching & Learning Unit in CIT (click link for slides):

Contexts and Concepts: A Case Study of Mathematics Assessment for Civil & Environmental Engineering

I received the following email (extract) from a colleague:

With the birthday question the chances of 23 people having unique birthdays is less than ½ so probability of shared birthdays is greater than 1-in-2.

Coincidentally on the day you sent out the paper, the following question/math fact was in my son’s 5th Class homework.

We are still debating the answer, hopefully you could clarify…

In a group of 368 people, how many should share the same birthday. There are 16×23 in 368 so there are 16 ways that 2 people should share same birthday (?) but my son pointed out, what about 3 people or 4 people etc.

I don’t think this is an easy problem at all.

First off we assume nobody is born on a leap day and the distribution of birthdays is uniform among the 365 possible birthdays. We also assume the birthdays are independent (so no twins and such).

They were probably going for 16 or 32, but that is wrong both for the reasons given by your son and because people in different sets of 23 can also share birthdays.

The brute force way of calculating it is to call by $X$ the random variable that is the number of people who share a birthday. The question is then more or less looking for the expected value of $X$, $\mathbb{E}[X]$, which is given by:

$$\mathbb{E}[X] = \sum_{k=0}^{368} k \cdot \mathbb{P}[X = k].$$

Already we have that $\mathbb{P}[X = k] = 0$ for $k \leq 3$ (why?), and $\mathbb{P}[X = 4]$ is (why?) the probability that four people share one birthday and 364 have different birthdays. This probability isn’t too difficult to calculate (it is astronomically small) but then things get a lot harder.

For $X = 5$, there are two possibilities:

- 5 share a birthday, 363 different birthdays, OR

- 2 share a birthday, 3 share a different birthday, and the remaining 363 have different birthdays

Then $X = 6$ is already getting very complex:

- 6 share a birthday, 362 different birthdays, OR

- 3, 3, 362

- 4, 2, 362

- 2, 2, 2, 362

This problem is spiraling out of control.

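There is another approach, which takes advantage of the fact that expectation is linear, and that the probability of an event not happening is

$$\mathbb{P}[\text{not } E] = 1 - \mathbb{P}[E].$$

Label the 368 people by $i = 1, 2, \dots, 368$ and define a random variable $X_i$ by

$$X_i = \begin{cases} 1 & \text{if person } i \text{ shares a birthday with someone else,} \\ 0 & \text{otherwise.} \end{cases}$$

Then $X$, the number of people who share a birthday, is given by:

$$X = X_1 + X_2 + \cdots + X_{368},$$

and we can calculate $\mathbb{E}[X]$ using the linearity of expectation:

$$\mathbb{E}[X] = \mathbb{E}[X_1] + \mathbb{E}[X_2] + \cdots + \mathbb{E}[X_{368}].$$

The $X_i$ are not independent, but the linearity of expectation holds even when the addend random variables are not independent… and each of the $X_i$ has the same expectation. Let $p_i$ be the probability that person $i$ does not share a birthday with anyone else; then

$$\mathbb{E}[X_i] = 1 \cdot \mathbb{P}[X_i = 1] + 0 \cdot \mathbb{P}[X_i = 0],$$

but $0 \cdot \mathbb{P}[X_i = 0] = 0$, and

$$\mathbb{P}[X_i = 1] = 1 - \mathbb{P}[X_i = 0] = 1 - p_i,$$

and so

$$\mathbb{E}[X_i] = 1 - p_i.$$

All of the 368 have this same expectation and so

$$\mathbb{E}[X] = 368(1 - p_i).$$

Now, what is the probability that nobody shares person $i$’s birthday?

We need persons $1, \dots, i-1$ and $i+1, \dots, 368$ (367 persons) to have different birthdays to person $i$, and for each the probability of this happening is $\frac{364}{365}$. We do have independence here: person 1 not sharing person $i$’s birthday doesn’t change the probability of person 2 not sharing person $i$’s birthday. So $p_i$ is the product of the probabilities.

So we have that

$$p_i = \left(\frac{364}{365}\right)^{367},$$

and so the answer to the question is:

$$\mathbb{E}[X] = 368\left(1 - \left(\frac{364}{365}\right)^{367}\right) \approx 233.5.$$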
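The sketch below checks the linearity-of-expectation answer. As above, it assumes 365 equally likely, independent birthdays. It puts the closed form $368(1 - (364/365)^{367})$ next to a Monte Carlo estimate. The function names, the seed and the number of trials are arbitrary choices.

```python
import random


def expected_sharers(people: int, days: int = 365) -> float:
    """E[X] = n * (1 - ((d - 1) / d) ** (n - 1)), by linearity of expectation."""
    return people * (1 - ((days - 1) / days) ** (people - 1))


def simulate_sharers(people: int, days: int = 365, trials: int = 2000, seed: int = 1) -> float:
    """Monte Carlo estimate of the mean number of people who share a birthday."""
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        birthdays = [rng.randrange(days) for _ in range(people)]
        counts = [0] * days
        for b in birthdays:
            counts[b] += 1
        # Count the people whose birthday is shared with at least one other person.
        total += sum(1 for b in birthdays if counts[b] > 1)
    return total / trials


if __name__ == "__main__":
    print(expected_sharers(368))
    print(simulate_sharers(368))
```

The closed form gives about 233.5, and the simulation should land close to it.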

There is possibly another way of answering this using the fact that with 368 people there are $\binom{368}{2} = 67\,528$ pairs of people.
